Generative AI usage increasing across industries: KPMG report

by CM Staff

Nearly one-quarter (23 per cent) of working professionals said they are entering information about their employer (including its name) into prompts.

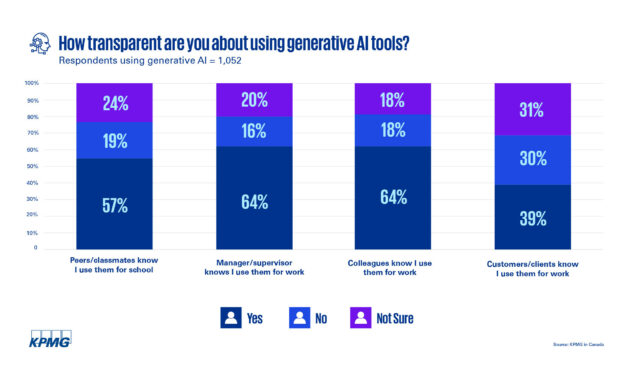

AI usage across industries (CNW Group/KPMG LLP)

TORONTO — One in five Canadians are using generative artificial intelligence (AI) tools to help them with their work or studies, finds new research from KPMG in Canada. But while many users are seeing a boost in productivity, the survey underscores the need for strong organizational controls and policies, as well as employee education, because some users are entering sensitive data into their prompts, not verifying results, and claiming AI content as their own.

A survey of 5,140 Canadians found 1,052 (20 per cent) have used generative AI to help with their jobs or schooling. The most common uses include research, generating ideas, writing essays and creating presentations. Respondents say the technology has enhanced productivity and quality, generated revenue and improved grades but, in the process, they are engaging in behaviour that could create risks for their employers.

“It’s absolutely critical for organizations to have clear processes and controls to prevent employees from entering sensitive information into generative AI prompts or relying on false or misleading material generated by AI,” says Zoe Willis, National Leader in Data, Digital and Analytics and partner in KPMG in Canada’s Generative AI practice. “This starts with clearly defined policies that educate your employees on the use of these tools. Organizational guardrails are essential to ensure compliance with privacy laws, client agreements and professional standards,” she adds.

The survey shows that among generative AI users, nearly one-quarter (23 per cent) of working professionals said they are entering information about their employer (including its name) into prompts, and some are even putting private financial data (10 per cent) or other proprietary information such as human resources or supply chain data (15 per cent) into their prompts.

When it comes to checking the accuracy of content generated by AI platforms, just under half (49 per cent) of users said they check every time, while 46 per cent check sometimes. Generative AI platforms have been known to produce misleading or inaccurate content, commonly called “hallucinations.”

“Data is an organization’s most valuable asset, and it needs to be safeguarded to prevent data leaks, privacy violations, and cybersecurity breaches, not to mention financial and reputational damage,” Ms. Willis says. “Organizations might need to look at creating proprietary generative AI models with safeguarded access to their own data – that’s a critical step to reducing the risk of sensitive data leaking out into the world and getting into the hands of potentially malicious actors.”